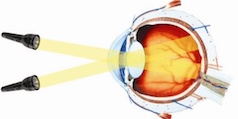

The brain has two methods of encoding information at its disposal. (This is an oversimplification, but probably not a severe one.) One is which neurons are active. For example, beginning with the retina, which neurons are responding to light tells the brain where the light is coming from. This works because of the optics of the eye: light must pass through a small opening, the pupil, in order to reach the retina at the back of the eye. The retina’s photoreceptors peer out at the world through that tiny pinhole, and each individual photoreceptor can view only a small portion of the visual scene. The portion that a given photoreceptor can “see” is known as its receptive field.

The retina contains over 100 million photoreceptors (Osterberg, 1935). Each one has a different receptive field, determined by its position on the retinal surface, and thus by the direction light must come from in order to pass through the pupil and be absorbed by that photoreceptor. Across the population of all photoreceptors, the spatial layout of light in the visual scene is reproduced as a spatial layout of activation in the array of photoreceptors.
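For readers who like to tinker, here is a toy version of that mapping. It is a minimal sketch, assuming a one-dimensional “retina” of just 11 photoreceptors behind an idealized pinhole pupil; the receptor count, eye depth, and function names are all made up for illustration. Light from above the line of sight lands on the lower retina and vice versa, so which receptor is active tells you where the light came from.

```python
import math

# Toy model, all values hypothetical: a 1D "retina" behind an idealized pinhole pupil.
N_RECEPTORS = 11          # real retinas have over 100 million; 11 keeps the output readable
EYE_DEPTH = 1.0           # distance from pupil to retina (arbitrary units)
RETINA_HALF_HEIGHT = 1.0  # retina spans -1 (bottom) to +1 (top) in the same units


def retinal_position(elevation_deg):
    """Height at which a ray through the pupil strikes the retina.

    Positive elevation (light from above) lands below center: the image is inverted.
    """
    return -EYE_DEPTH * math.tan(math.radians(elevation_deg))


def active_receptor(elevation_deg):
    """Index of the receptor whose receptive field contains this direction.

    Index 0 is the bottom of the retina. Returns None if the ray misses the retina.
    """
    y = retinal_position(elevation_deg)
    if abs(y) > RETINA_HALF_HEIGHT:
        return None
    fraction = (y + RETINA_HALF_HEIGHT) / (2 * RETINA_HALF_HEIGHT)  # 0 at bottom, 1 at top
    return round(fraction * (N_RECEPTORS - 1))


def encode(elevations_deg):
    """Binary place code: 1 for each receptor hit by light, 0 for all others."""
    code = [0] * N_RECEPTORS
    for elevation in elevations_deg:
        i = active_receptor(elevation)
        if i is not None:
            code[i] = 1
    return code


# A "top flashlight" 30 degrees up and a "bottom flashlight" 30 degrees down.
print(encode([30, -30]))
```

The top flashlight lights receptor 2 (low on the retina) and the bottom flashlight lights receptor 8 (high on the retina), so this prints `[0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0]`.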

So the code the brain receives is something like this:

The presence/absence of visual stimuli at different locations in the visual scene can be signaled by the presence/absence of activity in neurons at those corresponding retinal locations.

Light from the top flashlight hits the lower portion of the retina. Light from the bottom flashlight hits the upper portion of the retina. Thus, where on the retina neurons are responding to light indicates to the brain where the light is coming from.

Again, this is a gross oversimplification. Neurons are not simply “on” or “off”; they have graded response patterns that can vary continuously within a range. But the point here is this: if you were a Blind Martian who couldn’t see for yourself but had some science-fiction-y ability to monitor the activity of the retinal neurons of us Earth Creatures, you’d know quite a lot about the presence and location of light in the scene just from knowing which neurons were active.
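The Blind Martian’s trick survives the move from on/off to graded responses. Here is a minimal sketch, assuming nine hypothetical neurons with bell-shaped (Gaussian) receptive fields spaced 10 degrees apart; the spacing, width, and function names are arbitrary choices, not measured values. Reporting the preferred location of the single most active neuron gives a coarse answer, while weighting every neuron’s preferred location by its activity does better.

```python
import math

# Hypothetical population: nine neurons, each preferring a location along one axis (degrees).
PREFERRED = [-40 + 10 * i for i in range(9)]  # -40, -30, ..., +40
RF_WIDTH = 8.0  # assumed receptive-field width (standard deviation, degrees)


def responses(stimulus_deg):
    """Graded activity (0 to 1) of each neuron; strongest when the stimulus is at its preferred location."""
    return [math.exp(-((stimulus_deg - p) ** 2) / (2 * RF_WIDTH ** 2)) for p in PREFERRED]


def most_active_location(rates):
    """Crudest readout: the preferred location of the most active neuron."""
    best = max(range(len(rates)), key=lambda i: rates[i])
    return PREFERRED[best]


def center_of_mass(rates):
    """Finer readout: preferred locations averaged, weighted by each neuron's activity."""
    total = sum(rates)
    return sum(r * p for r, p in zip(rates, PREFERRED)) / total


rates = responses(12.0)
print(most_active_location(rates), center_of_mass(rates))
```

For a stimulus at 12 degrees, the most active neuron prefers 10 degrees, but the activity-weighted average lands much closer to the true 12.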

One reason I’ve made this on/off oversimplification is to set up an analogy with electronics and computing. This which-neurons-are-on kind of code resembles the binary digital format used in so many types of electronic devices.

In short, we can think of the brain’s which-neurons-are-on coding as its form of digital coding. But many electronic devices are analog: the magnitude of some signal, such as voltage, scales continuously with the information to be encoded.

The brain also uses analog coding: neural activity can vary continuously between being completely silent and discharging action potentials at a rate of hundreds per second. If a neural digital code is which-neurons-are-on, a neural analog code is how-on-are-they. So, trivially, you can rethink the discussion above with finer-grained values in place of the 0’s and 1’s. But more importantly, the level of activity of neurons can be meaningful in and of itself. And different kinds of codes can be used in different contexts.
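To make the contrast concrete, here is a toy comparison of the two formats encoding the same quantity. It is a minimal sketch, assuming a value `x` between 0 and 1, a hypothetical population of ten on/off neurons for the digital format, and a single neuron with an assumed 300 spikes/s ceiling for the analog format; none of these numbers come from real recordings.

```python
# Toy comparison of the two formats for a single quantity x in [0, 1]. All values are illustrative.
MAX_RATE = 300.0  # assumed ceiling in spikes/s ("hundreds per second")
N_NEURONS = 10    # size of a hypothetical which-neurons-are-on population


def digital_code(x):
    """Which-neurons-are-on: x falls into one of N_NEURONS bins, and only that bin's neuron is on."""
    i = min(int(x * N_NEURONS), N_NEURONS - 1)
    return [1 if j == i else 0 for j in range(N_NEURONS)]


def analog_code(x):
    """How-on-are-they: one neuron whose firing rate scales linearly with x."""
    return MAX_RATE * x


def decode_digital(code):
    """Best guess from the digital code: the center of the active neuron's bin."""
    return (code.index(1) + 0.5) / N_NEURONS


def decode_analog(rate):
    """Invert the linear rate code."""
    return rate / MAX_RATE


x = 0.37
print(digital_code(x), decode_digital(digital_code(x)))
print(analog_code(x), decode_analog(analog_code(x)))
```

In this noiseless toy, the digital format spends ten neurons and still only pins `x` down to its bin (0.35 here), while one analog neuron recovers 0.37. Real neurons are noisy, so in practice the precision of a rate code is limited by response variability.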

We’ve been investigating the coding format used by the auditory system. Does the brain use a digital, which-neurons-are-on format for encoding sound location, or an analog, how-on-are-they format? The auditory system has to be clever to determine where sounds are coming from, because no physical mapping of sound location onto neuron location occurs in the ear. Instead, the brain infers the location of sounds by comparing what each ear hears. A sound located to the right will be slightly louder in the right ear than in the left ear, and it will arrive in the right ear sooner by a tiny amount: not just a fraction of a second, but a fraction of a millisecond! How much louder, and how much sooner, varies with the direction the sound is coming from. The biggest differences occur when the sound is all the way to the left or right, and there is no difference when a sound is straight ahead (or behind).
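To get a feel for just how small these timing differences are, here is a sketch using the classic Woodworth spherical-head approximation, ITD ≈ (r/c)(θ + sin θ). This is a textbook idealization, not anything measured in our experiments, and it assumes a typical adult human head radius of 8.75 cm and a speed of sound of 343 m/s (monkey heads are smaller, so their differences are smaller still). It reproduces the two features described above: no difference straight ahead or behind, and the largest difference, roughly two-thirds of a millisecond, with the sound off to one side.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average adult human head radius (m)
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C


def lateral_angle(azimuth_deg):
    """Fold azimuth (0 = ahead, +90 = right, 180 = behind) onto -90..+90 degrees.

    In this model a sound behind gives the same timing difference as its mirror image in front.
    """
    a = (azimuth_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    if a > 90.0:
        a = 180.0 - a
    elif a < -90.0:
        a = -180.0 - a
    return a


def itd_seconds(azimuth_deg):
    """Woodworth approximation of the interaural time difference.

    Positive values mean the sound reaches the right ear first.
    """
    theta = math.radians(lateral_angle(azimuth_deg))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


for az in (0, 30, 60, 90, 180):
    print(f"{az:>3} deg: {itd_seconds(az) * 1e6:6.0f} microseconds")
```

The front/back fold is also why, in this simple model, timing alone cannot tell a sound straight ahead from one straight behind.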

In short, the information from which the brain has to infer the location of sounds is not itself spatial, as it is in the visual system, but consists of the magnitudes of interaural timing and level differences. Interestingly, we have found that in two early stages of the primate brain, sound location is encoded in neural activity primarily in an analog format. Most neurons discharge for most sound locations, but the amount of activity they exhibit depends on the location of the sound, with the greatest activity for sounds near the axis of one ear or the other. A Deaf Martian could get a pretty good idea of where sounds are coming from (in the horizontal dimension) by monitoring the relative activity of left-preferring vs. right-preferring neurons.
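A Deaf Martian’s readout might look something like this. It is a minimal sketch, assuming two hypothetical pools of neurons with broad sigmoid-shaped tuning; the slope, gain, and function names are invented for illustration and are not fitted to our data. Both pools are active for most locations, so location is carried by how active each pool is, and the normalized difference between the pools can be inverted to recover azimuth.

```python
import math

SLOPE_DEG = 20.0  # assumed steepness of the hypothetical tuning curves (degrees)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def population_rates(azimuth_deg, gain=100.0):
    """Mean rates (spikes/s) of two broadly tuned pools: (left-preferring, right-preferring).

    Right-preferring activity rises as the sound moves right, left-preferring as it moves left,
    and both pools fire at half their maximum for a sound straight ahead. 'gain' stands in for
    anything (e.g. loudness) that scales both pools together.
    """
    right = gain * sigmoid(azimuth_deg / SLOPE_DEG)
    left = gain * sigmoid(-azimuth_deg / SLOPE_DEG)
    return left, right


def decode_azimuth(left, right):
    """Estimate azimuth from the relative activity of the two pools."""
    contrast = (right - left) / (right + left)           # in (-1, 1); a shared gain cancels
    contrast = max(-0.999999, min(0.999999, contrast))   # keep atanh finite
    # For these sigmoids, contrast = tanh(azimuth / (2 * SLOPE_DEG)), so invert it.
    return 2.0 * SLOPE_DEG * math.atanh(contrast)


print(decode_azimuth(*population_rates(35.0)))             # close to 35
print(decode_azimuth(*population_rates(35.0, gain=10.0)))  # same estimate at a tenth the activity
```

In this toy model, scaling both pools by the same factor leaves the estimate unchanged, which is one appeal of reading out relative rather than absolute activity.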